Chapter 1 Signals and Systems

Introduction

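- Given the input and the system, find the output: output prediction

- Given the output and the system, find the input: inverse problem

- Given the input and the output, find the system: system analysis (and design)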

Mathematical definition of signal

- A signal is a mathematical function that is created to transmit information.

- A function is a mathematical entity that includes

- independent variables

- dependent variables

- a mapping that describes their relationship

- All signals studied in this course can be represented by functions.

Signal types

- Continuous-time signals

- The underlying signal has a value at every point in time.

- Discrete-time signals

- The underlying signal has values only at a discrete set of time points.

Examples:

- Continuous-time signal example:

- speech signal

- painting : continuous-time two-dimensional signal

- Discrete-time signal example

- stock market index: discrete-time one-dimensional signal

- digitized picture: discrete-time two-dimensional signal

| Signal Type | Domain | Multi-Dimensional | Value Type |

|---|---|---|---|

| Continuous-Time | Continuous | Yes (images, videos, etc.) | Real or Complex |

| Discrete-Time | Discrete | Yes (digitized images, videos, etc.) | Real or Complex |

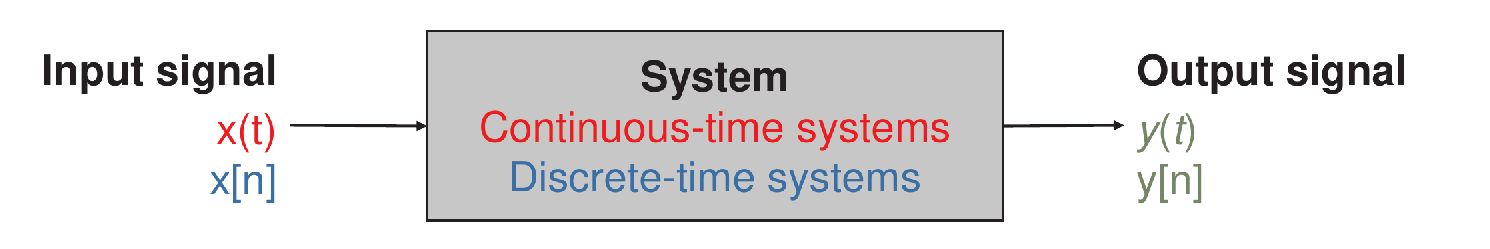

System: signal processor

- A system is a signal processor that maps a function (input signal) into another function (output signal)

System properties:

- Linear / non-linear

- Time-invariant / time-variant

Interconnections of systems

System interconnection: connecting small systems to generate large, complicated systems

-

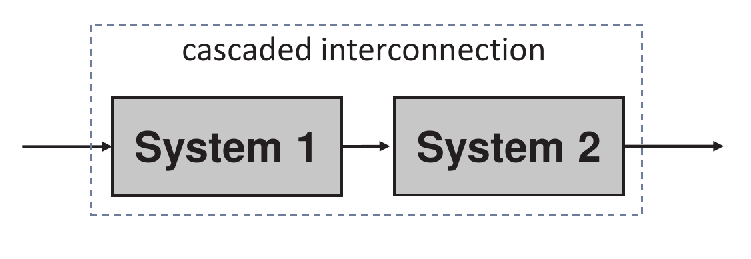

Series (cascaded) interconnection 串联/级联

![image-20250221173459864]()

-

Parallel interconnection 并联

![image-20250221173519736]()

-

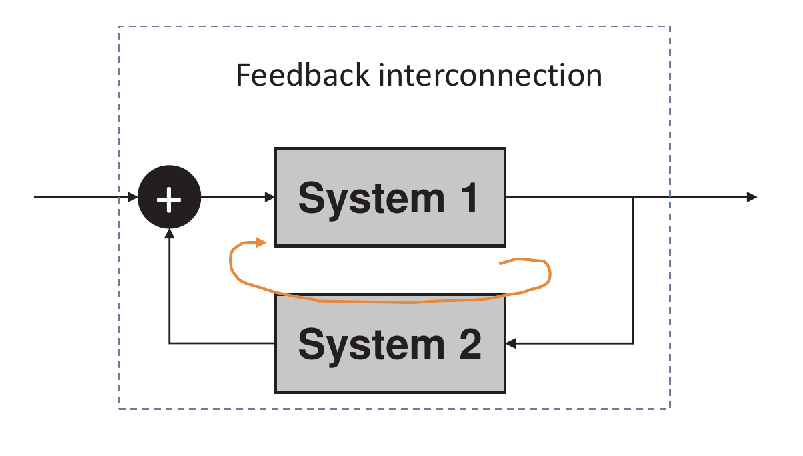

Feedback interconnection 反馈

![image-20250221173538715]()

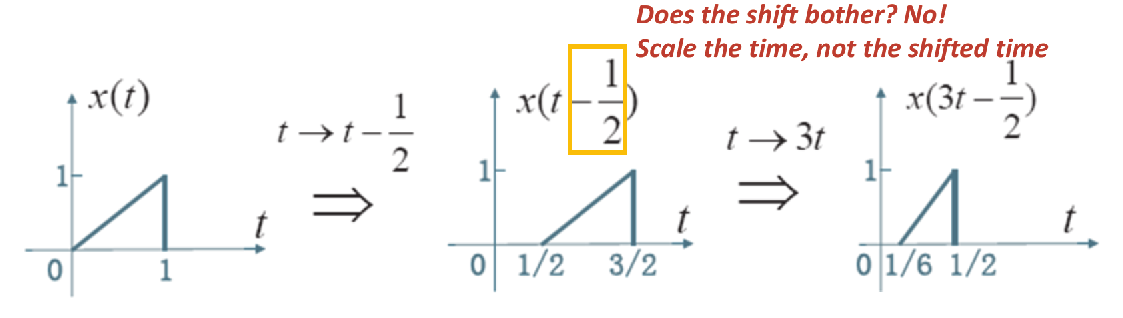

Transformations of independent variable

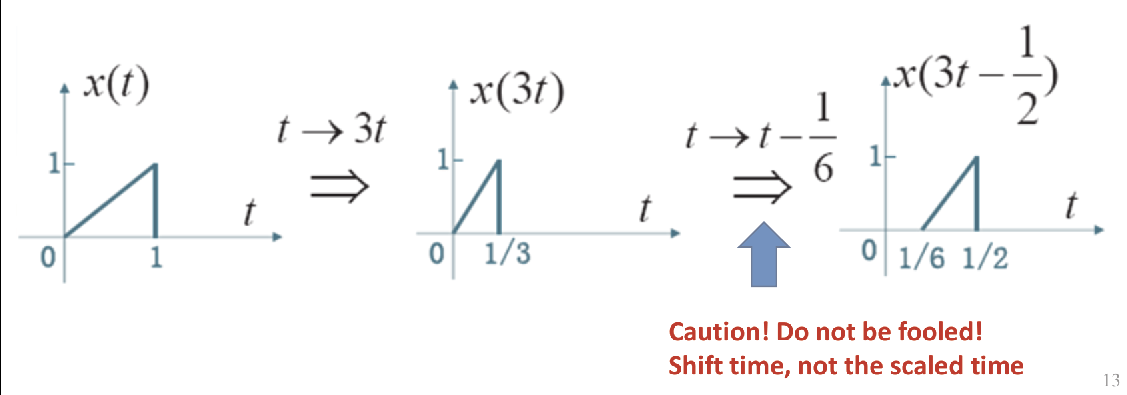

Time Scaling

Intuitively, the transformation $$x(t) \to x(at)$$ squeezes or stretches the original signal

- $$a > 1$$, “Squeezing”

- $$0 < a < 1$$, “Stretching”

Important Notice: When we squeeze or stretch by applying $$x(at)$$, we squeeze or stretch the signal about $$t = 0$$.

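Time Shifting

Time shift for continuous-time signals

- $$x(t - t_0)$$ with $$t_0 > 0$$: right shift, i.e. the signal is delayed

- $$x(t + t_0)$$ with $$t_0 > 0$$: left shift, i.e. the signal is advanced

Time shift for discrete-time signals

- You cannot always apply time scaling to discrete-time signals, because $$x[an]$$ is undefined whenever $$an$$ is not an integer.

Notice

- Always scale the time variable ($$t$$ itself), not the shifted time. For example, scaling $$x(t - t_0)$$ by $$a$$ gives $$x(at - t_0)$$, not $$x(a(t - t_0))$$.

- Likewise, always shift the time variable ($$t$$ itself), not the scaled time. For example, shifting $$x(at)$$ by $$t_0$$ gives $$x(a(t - t_0))$$, not $$x(at - t_0)$$.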
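The two rules above can be checked numerically. The sketch below is only an illustration: the triangle test signal and the values `a = 2`, `b = 3` are assumptions chosen for the example, and `shift` / `scale` are hypothetical helper names. It builds $$x(at - b)$$ in two correct ways and one incorrect way.

```python
def x(t):
    """Hypothetical test signal: unit-height triangle supported on [0, 2]."""
    return max(0.0, 1.0 - abs(t - 1.0))

def shift(sig, t0):
    """Replace t by (t - t0): delay the signal by t0."""
    return lambda t: sig(t - t0)

def scale(sig, a):
    """Replace t by (a * t): squeeze (a > 1) or stretch (0 < a < 1)."""
    return lambda t: sig(a * t)

a, b = 2.0, 3.0
target = lambda t: x(a * t - b)

# Shift by b first, then scale t itself: x(t - b) -> x(a t - b)
shift_then_scale = scale(shift(x, b), a)
# Scale first, then shift t itself by b / a: x(a t) -> x(a (t - b/a)) = x(a t - b)
scale_then_shift = shift(scale(x, a), b / a)
# Scaling first and then shifting by b gives x(a (t - b)) = x(a t - a b) instead
wrong_order = shift(scale(x, a), b)

ts = [k / 10 for k in range(-50, 51)]
print(max(abs(shift_then_scale(t) - target(t)) for t in ts))
print(max(abs(scale_then_shift(t) - target(t)) for t in ts))
print(wrong_order(2.0), target(2.0))
```

The first two printed errors should be at rounding level, while the last line shows that the naive combination produces a different signal.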

Time Reversal

$$x(t) \to x(-t)$$: the signal is flipped about $$t = 0$$.

Continuous-time (CT) sinusoidal signals

Definition: A sinusoidal signal is any signal whose function is derived from a standard cosine function $$\cos(t)$$ through:

1. **Magnitude scaling** (amplitude adjustment)

2. **Time scaling** (frequency adjustment)

3. **Time shifting** (phase adjustment)

$$x(t) = A\cos(\omega_0 t + \phi)$$

- $$A$$: amplitude (= magnitude scaling)

- $$\omega_0$$: frequency (= time scaling)

- $$\phi$$: phase (= time shifting)

From the trigonometric identity, we know

$$\sin(\omega_0 t + \phi) = \cos\left(\omega_0 t + \phi - \frac{\pi}{2}\right)$$

- So the sine function is just a phase change of the cosine function

- For any magnitude, frequency, and phase, cosine and sine are identical except for a 90° phase change

Property 1: periodicity

Definition of Periodicity

- A signal $$x(t)$$ is periodic if and only if there exists a number $$T > 0$$ such that:

$$x(t + T) = x(t) \quad \text{for all } t$$

- The smallest such $$T$$ is called the fundamental period, denoted $$T_0$$.

- In other words, a periodic signal equals itself after a time shift of $$T$$.

Periodicity of Sinusoidal Signals

- A sinusoidal signal of the form:

$$x(t) = A\cos(\omega_0 t + \phi)$$

is periodic.

- The fundamental period of this signal is:

$$T_0 = \frac{2\pi}{|\omega_0|}$$

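Property 2: time-shift = phase-change

A time shift is equivalent to a phase change for a sinusoidal signal $$x(t) = A\cos(\omega_0 t + \phi)$$ ($$\omega_0 \neq 0$$)

- Time shift $$\Rightarrow$$ phase change

- Phase change $$\Rightarrow$$ time shift

- An implication: sine is cosine shifted by a quarter (1/4) of a period

Proof

$$x(t - t_0) = A\cos(\omega_0 (t - t_0) + \phi) = A\cos(\omega_0 t + \phi - \omega_0 t_0)$$

So let $$\Delta\phi = -\omega_0 t_0$$, then $$x(t - t_0) = A\cos(\omega_0 t + \phi + \Delta\phi)$$.

Identically,

$$A\cos(\omega_0 t + \phi + \Delta\phi) = A\cos\left(\omega_0\left(t + \frac{\Delta\phi}{\omega_0}\right) + \phi\right)$$

So let $$t_0 = -\frac{\Delta\phi}{\omega_0}$$, then $$A\cos(\omega_0 t + \phi + \Delta\phi) = x(t - t_0)$$.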
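As a quick numerical sanity check of Property 2, the sketch below compares a time-shifted sinusoid with a phase-shifted one. The values of `A`, `w0`, `phi` and `t0` are arbitrary assumptions made for the example, not values from the notes.

```python
import math

# Assumed example parameters: amplitude, angular frequency (rad/s), phase
A, w0, phi = 1.5, 2.0 * math.pi * 3.0, 0.4

def x(t, phase=phi):
    """Sinusoid A*cos(w0*t + phase)."""
    return A * math.cos(w0 * t + phase)

t0 = 0.05                 # time shift (delay)
dphi = -w0 * t0           # equivalent phase change
ts = [k / 200 for k in range(200)]

# x(t - t0) should match the same sinusoid with phase phi + dphi
max_err = max(abs(x(t - t0) - x(t, phi + dphi)) for t in ts)

# sine is cosine delayed by a quarter of the fundamental period T0 = 2*pi/|w0|
T0 = 2.0 * math.pi / abs(w0)
quarter_err = max(abs(math.sin(w0 * t) - math.cos(w0 * (t - T0 / 4))) for t in ts)

print(max_err, quarter_err)
```

Both errors should be at floating-point rounding level.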

Property 3: symmetry of sinusoidal signals

Definition

Even Symmetry

- A signal is even iff $$x(-t) = x(t)$$ for all $$t$$

- Symmetric about the y-axis

Odd Symmetry

- A signal is odd iff $$x(-t) = -x(t)$$ for all $$t$$

- Anti-symmetric, or symmetric about the origin

Even & odd decomposition

- Any real-valued signal can be decomposed into the sum of an even signal and an odd signal

- To see it, define:

$$x_e(t) = \frac{1}{2}\left[x(t) + x(-t)\right], \qquad x_o(t) = \frac{1}{2}\left[x(t) - x(-t)\right]$$

- The original signal can be expressed as:

$$x(t) = x_e(t) + x_o(t)$$

- Here $$x_e(t)$$ is the even component of the signal and $$x_o(t)$$ is the odd component of the signal.
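The even/odd decomposition can be sketched in a few lines. The test signal below is a made-up example that is neither even nor odd, and `even_part` / `odd_part` are hypothetical helper names.

```python
import math

def x(t):
    """Hypothetical real-valued test signal (neither even nor odd)."""
    return math.exp(-abs(t)) * (1.0 + t) + math.sin(t)

def even_part(sig):
    """x_e(t) = (x(t) + x(-t)) / 2."""
    return lambda t: 0.5 * (sig(t) + sig(-t))

def odd_part(sig):
    """x_o(t) = (x(t) - x(-t)) / 2."""
    return lambda t: 0.5 * (sig(t) - sig(-t))

xe, xo = even_part(x), odd_part(x)
ts = [k / 10 for k in range(-30, 31)]

even_ok = all(abs(xe(t) - xe(-t)) < 1e-12 for t in ts)        # x_e(-t) = x_e(t)
odd_ok = all(abs(xo(t) + xo(-t)) < 1e-12 for t in ts)         # x_o(-t) = -x_o(t)
sum_ok = all(abs(xe(t) + xo(t) - x(t)) < 1e-12 for t in ts)   # x = x_e + x_o
print(even_ok, odd_ok, sum_ok)
```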

CT real exponential

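- $$x(t) = Ce^{at}$$, where $$C$$ and $$a$$ are real numbers

- Assume $$C > 0$$

- $$a > 0$$: The exponential is **growing**.

- $$a = 0$$: The exponential is a **constant** ($$x(t) = C$$).

- $$a < 0$$: The exponential is **decaying**.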

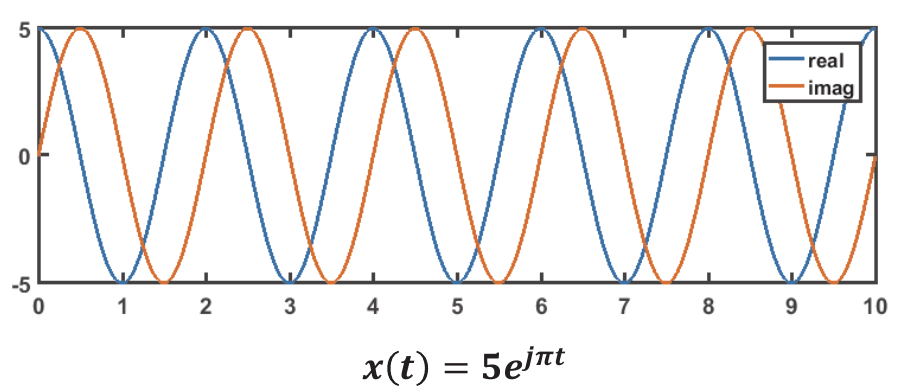

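Polar form of CT complex exponential signal

- $$x(t) = Ce^{at}$$, where $$C = |C|e^{j\theta}$$ and $$a$$ are complex numbers

- The rectangular/Cartesian form of the complex exponential signal:

- Let $$a = \gamma + j\omega_0$$, then

$$x(t) = |C|e^{\gamma t}\cos(\omega_0 t + \theta) + j|C|e^{\gamma t}\sin(\omega_0 t + \theta)$$

- Both the real and imaginary parts are:

- sinusoidal signals with a time-varying amplitude $$|C|e^{\gamma t}$$ (the envelope)

- This highlights the relationship between sinusoidal and exponential signals

A special case

- If $$\mathrm{Re}\{a\} = \gamma = 0$$, then

$$x(t) = |C|\cos(\omega_0 t + \theta) + j|C|\sin(\omega_0 t + \theta)$$

- Both the real and imaginary parts are pure sinusoidal signals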
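The relationship between the polar and rectangular forms can be verified numerically. This is a minimal sketch: the particular values of `C` and `a` are assumptions for illustration, and the symbols `gamma`, `w0`, `theta` follow the usual convention $$a = \gamma + j\omega_0$$, $$C = |C|e^{j\theta}$$.

```python
import cmath
import math

C = 2.0 * cmath.exp(1j * 0.3)   # assumed: |C| = 2, theta = 0.3
a = complex(-0.5, 4.0)          # assumed: gamma = -0.5, omega_0 = 4

def x(t):
    """Complex exponential C * exp(a t)."""
    return C * cmath.exp(a * t)

def rectangular(t):
    """Envelope times cos / sin, i.e. the real and imaginary parts of x(t)."""
    mag, theta = abs(C), cmath.phase(C)
    gamma, w0 = a.real, a.imag
    envelope = mag * math.exp(gamma * t)
    return complex(envelope * math.cos(w0 * t + theta),
                   envelope * math.sin(w0 * t + theta))

ts = [k / 20 for k in range(-40, 41)]
max_err = max(abs(x(t) - rectangular(t)) for t in ts)
# |x(t)| equals the envelope |C| * exp(gamma * t)
env_err = max(abs(abs(x(t)) - abs(C) * math.exp(a.real * t)) for t in ts)
print(max_err, env_err)
```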

Signal decomposition

- Almost all real signals can be represented by linear combinations of sinusoidal signals

- Almost all complex signals can be represented by linear combinations of complex exponential signals

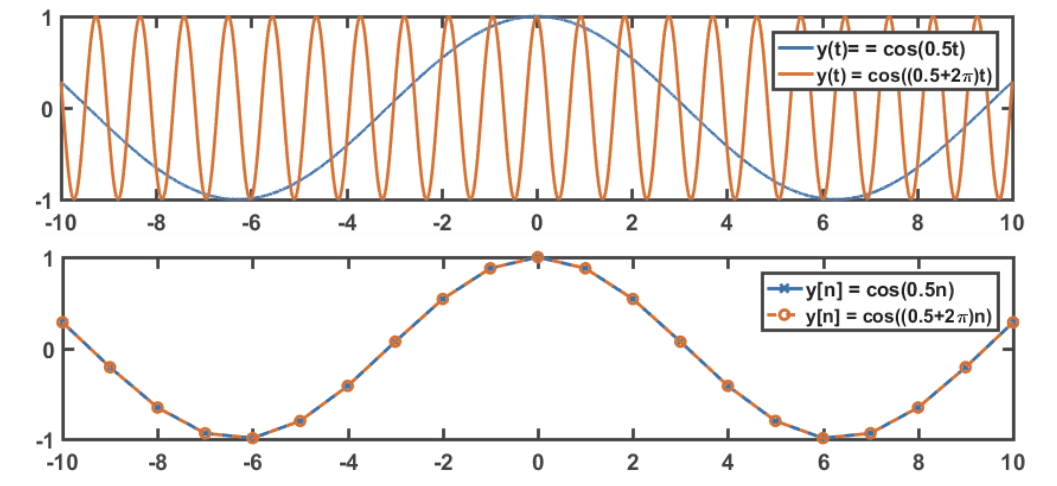

Discrete-time (DT) sinusoidal signals

- DT signals are just sampled CT signals

- Definitions and properties of DT signals are generally similar to CT signals.

- However, the discretization process can lead to the loss of certain properties inherent in the original CT signals.

DT sinusoidal

Definition

$$x[n] = A\cos(\Omega_0 n + \phi)$$

- Where:

- $$A$$: Amplitude

- $$\Omega_0$$: Frequency

- $$\phi$$: Phase

lost property #1

- Phase change $$\not\Rightarrow$$ time shift (the reverse still holds)

- Time shift $$\Rightarrow$$ phase change

- The key point is that in DT signals, any time shift must be an integer. If the ratio of the phase change to the frequency, $$\Delta\phi / \Omega_0$$, is not an integer, the phase change does not correspond to a time shift.

lost property #2

- A DT sinusoidal signal is not necessarily periodic

- In general, a DT sinusoidal signal is periodic iff $$\frac{2\pi}{\Omega_0}$$ (the period of its CT version) is a rational number.

- When a DT sinusoidal signal is periodic (taking $$\Omega_0 > 0$$), write $$\frac{2\pi}{\Omega_0} = \frac{p}{q}$$, where $$p$$ and $$q$$ are coprime positive integers (i.e., their greatest common divisor is 1). The fundamental period is then $$p$$.

lost property #3

- Different frequencies do not imply different signals

- This is because $$A\cos(\Omega_1 n + \phi) = A\cos((\Omega_0 + 2\pi m)n + \phi) = A\cos(\Omega_0 n + \phi)$$ if $$\Omega_1 - \Omega_0 = 2\pi m$$ for some integer $$m$$.

- This is why aliasing occurs when a continuous-time signal is sampled into a discrete-time signal.
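Lost properties #2 and #3 can be illustrated with exact rational arithmetic. In this sketch the frequency is passed as the ratio $$\Omega_0 / (2\pi)$$ (an assumption made so that rationality can be tested exactly); `fundamental_period` and `dt_cos` are hypothetical helper names.

```python
import math
from fractions import Fraction

def fundamental_period(ratio):
    """ratio = Omega_0 / (2*pi) as an exact Fraction.

    If Omega_0 / (2*pi) = q / p in lowest terms, then 2*pi / Omega_0 = p / q
    and the fundamental period is p, i.e. the denominator of the ratio.
    """
    return Fraction(ratio).denominator

def dt_cos(ratio, n, phi=0.0):
    """cos(Omega_0 * n + phi) with Omega_0 = 2*pi*ratio."""
    return math.cos(2.0 * math.pi * float(ratio) * n + phi)

ratio = Fraction(3, 8)            # Omega_0 = 3*pi/4, so 2*pi/Omega_0 = 8/3
N = fundamental_period(ratio)     # expected period: 8 samples

periodic_err = max(abs(dt_cos(ratio, n + N) - dt_cos(ratio, n)) for n in range(-20, 20))

# Lost property #3: Omega_0 and Omega_0 + 2*pi give the same DT signal
alias_err = max(abs(dt_cos(ratio + 1, n) - dt_cos(ratio, n)) for n in range(-20, 20))
print(N, periodic_err, alias_err)
```

A frequency such as $$\Omega_0 = 1$$ has an irrational ratio $$1/(2\pi)$$, so it cannot be written as a `Fraction` at all, which mirrors the fact that the corresponding DT sinusoid is not periodic.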

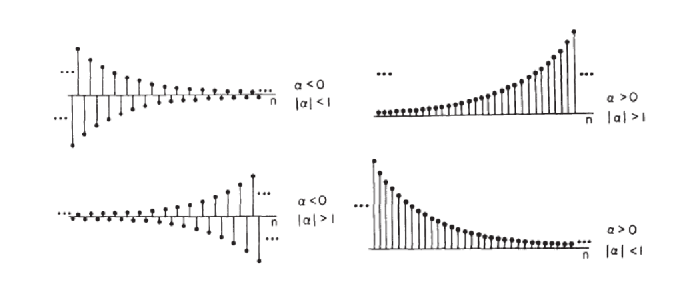

DT exponential

- Real exponential

- Complex exponential (by default)

- DT sinusoidal signals are building blocks of DT real signals

- DT complex exponential signals are building blocks of DT complex signals

DT real exponential

- Algebraic Definition: $$x[n] = C\alpha^n$$

- $$C$$ and $$\alpha$$ are real numbers.

- Note: This is not, in general, a direct sampling of the CT exponential ($$Ce^{at}$$).

- Relationship to CT Real Exponential Signal:

- Case 1: If $$\alpha > 0$$, there exists $$\beta$$ such that $$\alpha = e^{\beta}$$, and the DT signal becomes: $$x[n] = C e^{\beta n}$$

- This is a sampling of the CT real exponential.

- Case 2: If $$\alpha < 0$$, the DT real exponential is not a direct sampling of the CT real exponential.

- Behavior of $$\alpha$$:

- Decaying Signal: If $$|\alpha| < 1$$, the signal decays as $$n$$ increases.

- Growing Signal: If $$|\alpha| > 1$$, the signal grows as $$n$$ increases.

- Oscillatory Signal: If $$\alpha < 0$$, the signal alternates in sign.

- Advantage of DT Real Exponential:

- The form $$C\alpha^n$$ increases the range of signals that can be represented by discrete-time real exponentials compared to CT exponentials.

DT Complex Exponential:

Algebraic Definition

$$x[n] = C\alpha^n$$

- Polar Form:

- $$C$$ and $$\alpha$$ are complex numbers ($$C = |C|e^{j\theta}$$, $$\alpha = |\alpha|e^{j\Omega_0}$$).

- Rectangular Form:

Let $$C = |C|e^{j\theta}$$ and $$\alpha = |\alpha|e^{j\Omega_0}$$, then:

$$x[n] = |C||\alpha|^n\cos(\Omega_0 n + \theta) + j|C||\alpha|^n\sin(\Omega_0 n + \theta)$$

This represents the real and imaginary parts of the DT complex exponential.

Behavior of DT Complex Exponential

- Magnitude of $$\alpha$$:

- If $$|\alpha| > 1$$, the signal grows exponentially.

- If $$|\alpha| < 1$$, the signal decays exponentially.

- Frequency $$\Omega_0$$: Determines the oscillation frequency of the signal.

Relationship to CT Complex Exponential

- DT complex exponentials are direct samplings of CT complex exponential signals.

- However, the real and imaginary parts of the DT signal are not necessarily related by a time shift (unlike CT signals).

Envelope of the Signal

- The envelope of the signal is determined by $$|\alpha|$$:

- If $$|\alpha| > 1$$, the envelope grows exponentially.

- If $$|\alpha| < 1$$, the envelope decays exponentially.

Visual Representation

- The signal can be visualized as a combination of growing/decaying oscillations (real and imaginary parts) modulated by the envelope $$|C||\alpha|^n$$.

Signal Energy and Power

Finite Time Interval

Energy

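- The total energy of a signal over a finite time interval ($$t_1 \le t \le t_2$$ in CT, $$n_1 \le n \le n_2$$ in DT) is defined by:

$$E = \int_{t_1}^{t_2} |x(t)|^2\,dt \quad \text{(CT)}, \qquad E = \sum_{n=n_1}^{n_2} |x[n]|^2 \quad \text{(DT)}$$

- If $$x$$ is real-valued, $$|x|$$ is the absolute value.

- If $$x$$ is complex-valued, $$|x|$$ is the magnitude.

Average Power (P)

- The average power over a time interval is the energy over that interval divided by the length of the interval:

$$P = \frac{1}{t_2 - t_1}\int_{t_1}^{t_2} |x(t)|^2\,dt \quad \text{(CT)}, \qquad P = \frac{1}{n_2 - n_1 + 1}\sum_{n=n_1}^{n_2} |x[n]|^2 \quad \text{(DT)}$$

Entire Time Domain

Energy

- The total energy of a signal over the entire time domain is defined by:

$$E_\infty = \lim_{T \to \infty}\int_{-T}^{T} |x(t)|^2\,dt \quad \text{(CT)}, \qquad E_\infty = \lim_{N \to \infty}\sum_{n=-N}^{N} |x[n]|^2 \quad \text{(DT)}$$

- As before, $$|x|$$ is the absolute value for a real-valued signal and the magnitude for a complex-valued signal.

Average Power

- The average power over the entire time domain is:

$$P_\infty = \lim_{T \to \infty}\frac{1}{2T}\int_{-T}^{T} |x(t)|^2\,dt \quad \text{(CT)}, \qquad P_\infty = \lim_{N \to \infty}\frac{1}{2N+1}\sum_{n=-N}^{N} |x[n]|^2 \quad \text{(DT)}$$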

Three Types of Signals

- Signals with finite total energy over the entire time domain

- Example:

- Total energy: $$E_\infty < \infty$$

- Average power: $$P_\infty = 0$$

- Signals with finite average power over the entire time domain

- Example:

- Average power: $$0 < P_\infty < \infty$$

- Total energy: $$E_\infty = \infty$$

- Signals with infinite energy and power over the entire time domain

- Example:

- Both total energy and average power are infinite: $$E_\infty = \infty$$, $$P_\infty = \infty$$
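The three classes can be illustrated with partial sums over $$-N \le n \le N$$. The three DT signals below are assumed examples chosen for this sketch (they are not the examples from the lecture), and a finite $$N$$ only approximates the limits.

```python
def energy(x, N):
    """Energy of x[n] over -N <= n <= N."""
    return sum(abs(x(n)) ** 2 for n in range(-N, N + 1))

def power(x, N):
    """Average power of x[n] over -N <= n <= N (2N + 1 samples)."""
    return energy(x, N) / (2 * N + 1)

finite_energy = lambda n: 0.5 ** abs(n)   # assumed example: energy converges to 5/3
finite_power = lambda n: 2.0              # assumed example: constant, power 4
neither = lambda n: float(n)              # assumed example: ramp, both diverge

for N in (10, 100, 1000):
    print(N,
          energy(finite_energy, N), power(finite_energy, N),
          energy(finite_power, N), power(finite_power, N),
          energy(neither, N), power(neither, N))
```

As $$N$$ grows, the first signal's energy settles while its power tends to zero, the second signal's power stays fixed while its energy keeps growing, and for the ramp both quantities keep growing.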

Properties of energy

- Energy does not depend on the sign of the signal: $$x$$ and $$-x$$ have the same energy.

- Energy is also invariant under time shift and time reversal, but it changes under time scaling.

Some thoughts and conclusions

- Discrete-Time Signals: A zero-energy signal is zero everywhere.

- Continuous-Time Signals: Zero energy does not imply the signal is zero everywhere, because the signal can be non-zero on a set of measure zero (e.g., at a finite number of isolated points).

Signal algebra

Signal manipulations by y-axis operations

Signal addition

$$y(t) = x_1(t) + x_2(t)$$

Multiplication/division

$$y(t) = x_1(t)\,x_2(t)$$ or $$y(t) = x_1(t) / x_2(t)$$: multiply/divide at every time point

Continuous: First-order derivative of continuous-time signals

$$y(t) = \frac{d}{dt}x(t)$$

Continuous: Running integral of continuous-time signals

$$y(t) = \int_{-\infty}^{t} x(\tau)\,d\tau$$

Note that $$x(t)$$ is the first-order derivative of $$y(t)$$.

Discrete: First-order difference/first difference

$$y[n] = x[n] - x[n-1]$$

Discrete: Running sum of discrete-time signal

$$y[n] = \sum_{k=-\infty}^{n} x[k]$$

Note that $$x[n]$$ is the first difference of $$y[n]$$.

If you first take the first difference and then the running sum, do you necessarily get back the same signal?

No! Because the constant component (DC component) is removed by the first difference.
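A small sketch of this effect, under stated assumptions: the test signal is a DC offset plus a unit step, and the running sum starts at a finite index `n_min`, which is valid only because the summed signal is zero before `n_min`. The helper names are hypothetical.

```python
def first_difference(x):
    """y[n] = x[n] - x[n-1]."""
    return lambda n: x(n) - x(n - 1)

def running_sum(x, n_min):
    """y[n] = sum of x[k] for k <= n, assuming x[k] = 0 for all k < n_min."""
    return lambda n: sum(x(k) for k in range(n_min, n + 1))

dc = 5
step = lambda n: 1 if n >= 0 else 0
x = lambda n: dc + step(n)            # DC component plus a unit step

# Difference first, then running sum: the DC component is lost
recovered = running_sum(first_difference(x), n_min=-10)

# Running sum first, then difference: the signal comes back unchanged
roundtrip = first_difference(running_sum(step, n_min=-10))

ns = range(-10, 11)
print([x(n) - recovered(n) for n in ns])     # constant offset equal to dc
print(all(roundtrip(n) == step(n) for n in ns))
```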

Basic Signals

Unit Step & Unit Impulse

They are commonly used test signals.

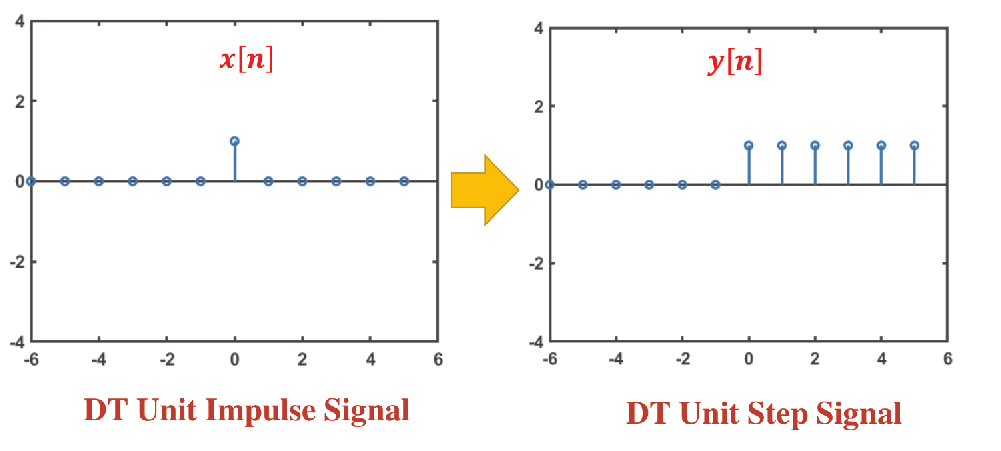

DT unit step & unit impulse

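Definition

$$u[n] = \begin{cases} 1, & n \ge 0 \\ 0, & n < 0 \end{cases} \qquad \delta[n] = \begin{cases} 1, & n = 0 \\ 0, & n \ne 0 \end{cases}$$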

Notations for unit step signal: or

Notations for unit impulse signal: or

Properties:

- The unit impulse is the first difference of the unit step: $$\delta[n] = u[n] - u[n-1]$$

- The unit step is the running sum of the unit impulse: $$u[n] = \sum_{k=-\infty}^{n} \delta[k]$$
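Both relationships are easy to confirm on a finite window. In this sketch the lower summation limit of -100 stands in for $$-\infty$$, which is safe here only because $$\delta[k]$$ is zero for all negative $$k$$.

```python
def u(n):
    """DT unit step."""
    return 1 if n >= 0 else 0

def delta(n):
    """DT unit impulse."""
    return 1 if n == 0 else 0

ns = range(-8, 9)

# delta[n] = u[n] - u[n-1]
diff_ok = all(u(n) - u(n - 1) == delta(n) for n in ns)

# u[n] = sum_{k <= n} delta[k]; -100 is a stand-in for minus infinity
sum_ok = all(sum(delta(k) for k in range(-100, n + 1)) == u(n) for n in ns)
print(diff_ok, sum_ok)
```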

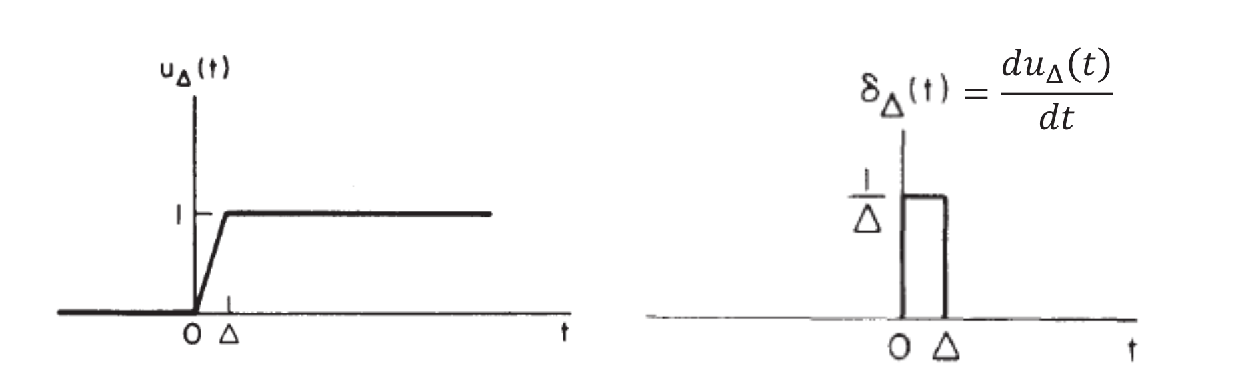

CT unit step & unit impulse

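Definition

CT unit step

$$u(t) = \begin{cases} 1, & t > 0 \\ 0, & t < 0 \end{cases}$$

CT unit impulse

$$\delta(t) = \frac{d}{dt}u(t)$$

This is somewhat ill-defined, because $$u(t)$$ is discontinuous at $$t = 0$$.

So the best mathematical definition is to use auxiliary functions: a continuous approximation $$u_\Delta(t)$$ of the unit step that rises from 0 to 1 over an interval of length $$\Delta$$, and its derivative $$\delta_\Delta(t) = \frac{d}{dt}u_\Delta(t)$$.

Then use the limit definition

$$u(t) = \lim_{\Delta \to 0} u_\Delta(t), \qquad \delta(t) = \lim_{\Delta \to 0} \delta_\Delta(t)$$

- The limit definition preserves the derivative-integral relationship between the unit step and unit impulse, because the relationship holds for $$u_\Delta(t)$$ and $$\delta_\Delta(t)$$ for every $$\Delta > 0$$.

- CT unit impulse has “zero width, infinite height, and area 1”.

- Graphical representation:

In conclusion, we have some good properties:

$$\delta(t) = \frac{d}{dt}u(t), \qquad u(t) = \int_{-\infty}^{t} \delta(\tau)\,d\tau$$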

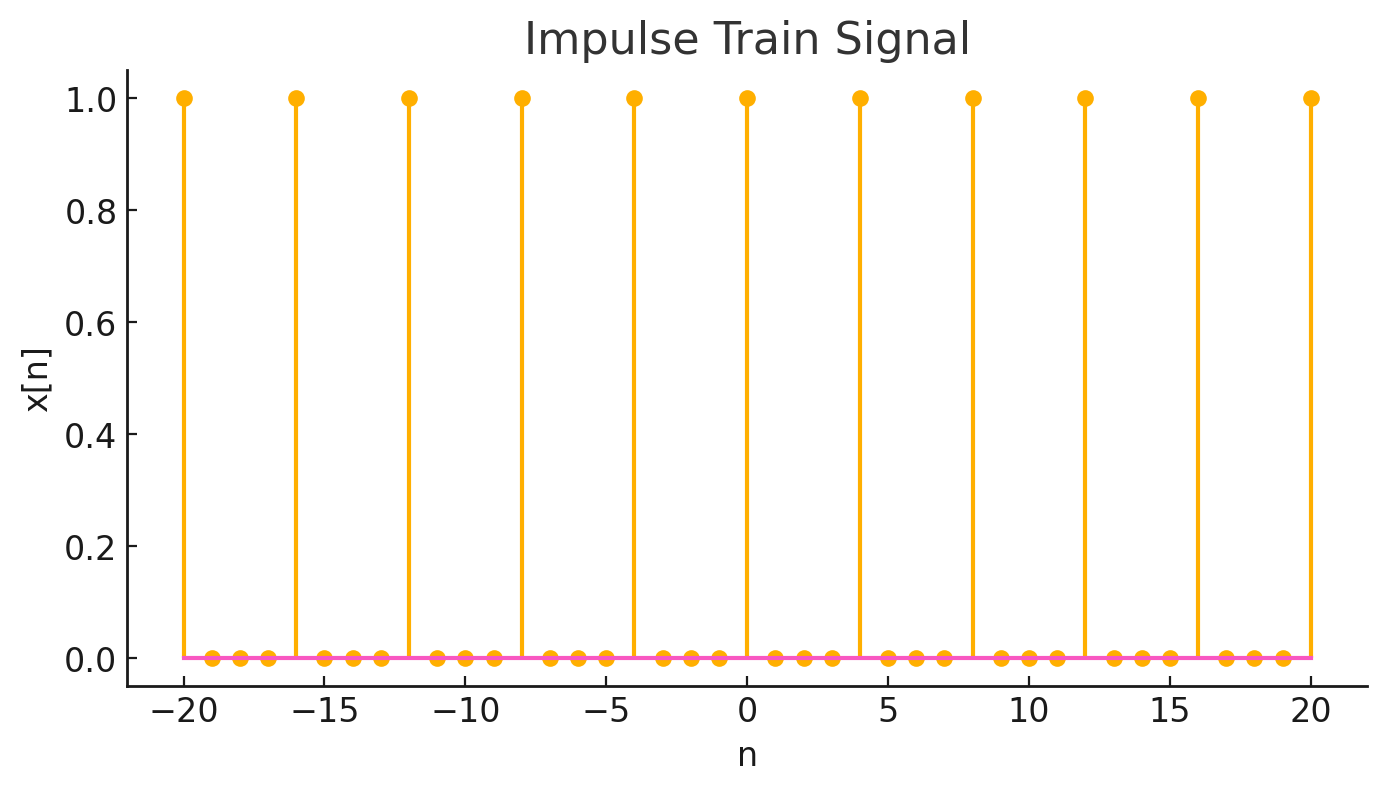

Example questions

The result is an impulse train signal

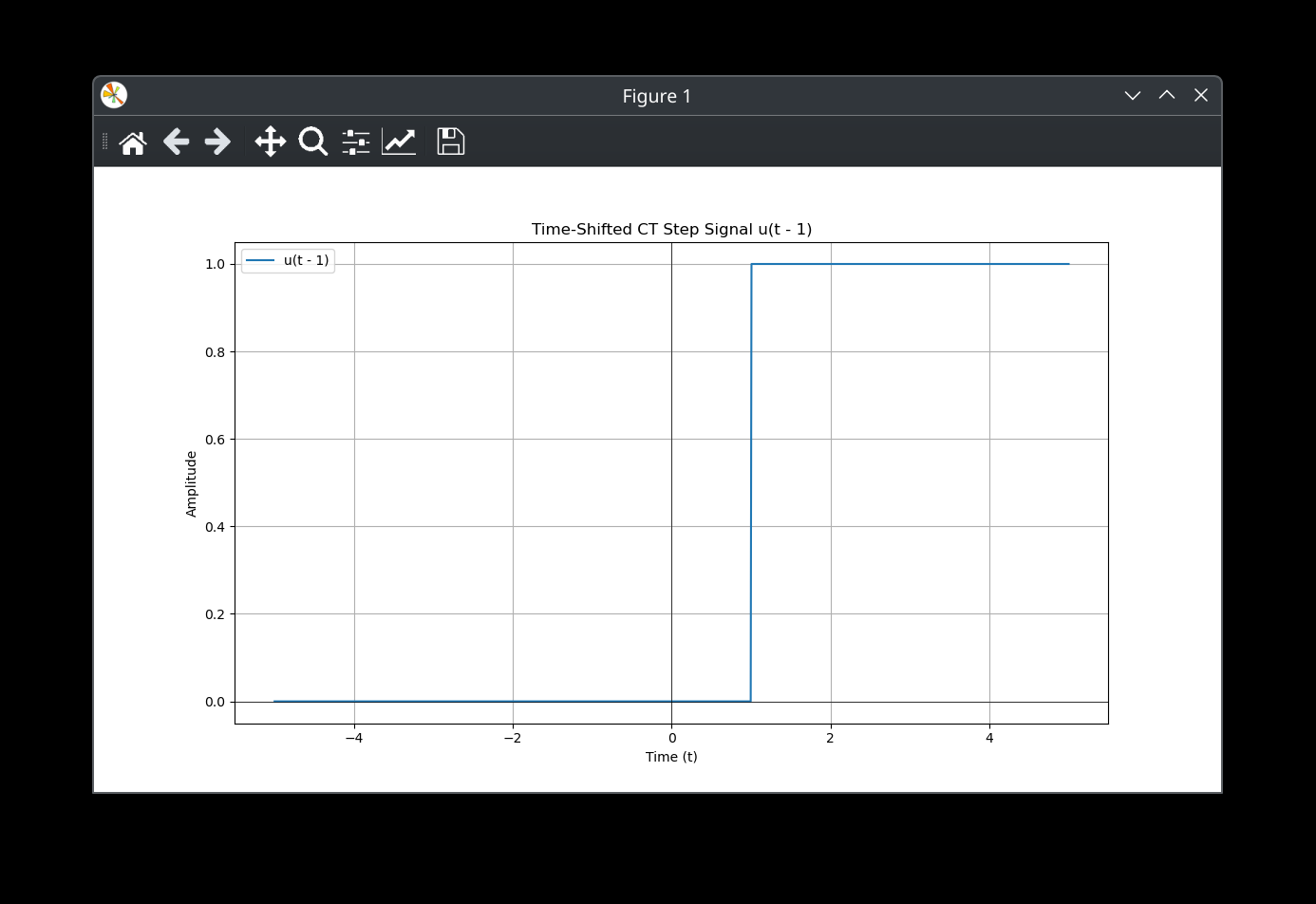

$$

x(t) = \int_{-\infty}^t \delta(\tau - 1)d\tau

$$

The result is a time shifted CT step signal

$$

x(t) = u(t-1)

$$


Description of systems

System Equations

- A system is often described by an equation that links each input to its corresponding output.

- These equations can be simple, in which case we have a simple system. Some examples are:

- Time-shifting system: $$y(t) = x(t - t_0)$$ or $$y[n] = x[n - n_0]$$

- Time-scaling system: $$y(t) = x(at)$$ or $$y[n] = x[an]$$

- Squarer: $$y(t) = x^2(t)$$ or $$y[n] = x^2[n]$$

- Differentiator: $$y(t) = \frac{d}{dt}x(t)$$ or $$y[n] = x[n] - x[n-1]$$

- Integrator: $$y(t) = \int_{-\infty}^{t} x(\tau)\,d\tau$$ or $$y[n] = \sum_{k=-\infty}^{n} x[k]$$

Order of system interconnections

- Series interconnection: in general, reversing the order of the systems in a series interconnection changes the overall system.

- Parallel interconnection: order does not matter.

- Feedback interconnection: order matters.
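The point about ordering can be seen with two of the simple systems above. This is a sketch only: systems are modelled as Python functions that map a signal (a function of time) to another signal, and `gain`, `cascade`, `parallel` and the test input are assumptions made for the example.

```python
def squarer(x):
    """y(t) = x(t)^2."""
    return lambda t: x(t) ** 2

def gain(k):
    """Return the system y(t) = k * x(t)."""
    return lambda x: (lambda t: k * x(t))

def cascade(first, second):
    """Series interconnection: the output of `first` feeds `second`."""
    return lambda x: second(first(x))

def parallel(s1, s2):
    """Parallel interconnection: both systems see x, outputs are added."""
    return lambda x: (lambda t: s1(x)(t) + s2(x)(t))

x = lambda t: t + 1.0      # assumed test input
double = gain(2.0)

square_then_double = cascade(squarer, double)   # 2 * x(t)^2
double_then_square = cascade(double, squarer)   # (2 * x(t))^2 = 4 * x(t)^2

print(square_then_double(x)(1.0), double_then_square(x)(1.0))
print(parallel(squarer, double)(x)(1.0), parallel(double, squarer)(x)(1.0))
```

Swapping the two systems changes the series result but not the parallel one, since addition is commutative.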

System Properties

- We often describe a system by its system properties, which give partial knowledge about the input-output relationship.

- Major properties include:

- Memoryless (Time-dependent)

- Causality (Time-dependent)

- Invertibility (Time-independent)

- Stability (Time-independent)

- Linearity (Time-independent)

- Time-invariance (Time-independent)

Time-dependent vs time-independent

- Time-dependent properties only consider how the system behaves along the time dimension

- Time-independent properties only consider how the system behaves along the signal dimension

Memoryless property

- Definition: the value of the output at any time point depends only on the value of the input at the same time point.

- Definition in a mathematical form: $$ y(t) \big|_{t=t_0} $$ depends only on $$ x(t) \big|_{t=t_0} $$ for every input $$ x(t) $$.

Causality

- Definition: A system is causal if the output at any given time point depends only on the input at or before that same time point. In other words, the system does not rely on future inputs to produce its current output.

- Mathematical Formulation:

- For continuous-time systems: $$ y(t) \big|_{t=t_0} $$ depends only on $$ x(t) \big|_{t \leq t_0} $$ for every input $$ x(t) $$.

- For discrete-time systems: $$ y[n] \big|_{n=n_0} $$ depends only on $$ x[n] \big|_{n \leq n_0} $$ for every input $$ x[n] $$.

- A memoryless system (where the output at any time depends only on the input at that same time) is always causal.

- However, the converse is not true: A causal system is not necessarily memoryless. Causal systems can have memory (depend on past inputs).
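One way to probe causality numerically is to feed a system two inputs that agree up to some time $$n_0$$ and differ afterwards; a causal system must then give identical outputs up to $$n_0$$. The sketch below uses assumed example systems and inputs. Note that a passing check on one input pair does not prove causality, whereas a failing check does disprove it.

```python
def running_sum_system(x, n_min=-20):
    """Causal: y[n] = sum of x[k] for k <= n (assumes x[k] = 0 for k < n_min)."""
    return lambda n: sum(x(k) for k in range(n_min, n + 1))

def lookahead_system(x):
    """Non-causal: y[n] = x[n + 1] uses a future input value."""
    return lambda n: x(n + 1)

def agrees_up_to(system, x1, x2, n0, n_min=-20):
    """Do the two outputs coincide for all n_min <= n <= n0?"""
    y1, y2 = system(x1), system(x2)
    return all(y1(n) == y2(n) for n in range(n_min, n0 + 1))

# Two assumed inputs that are identical for n <= 3 and differ for n > 3
x1 = lambda n: 1 if n >= 0 else 0
x2 = lambda n: x1(n) if n <= 3 else 7

print(agrees_up_to(running_sum_system, x1, x2, n0=3))   # consistent with causality
print(agrees_up_to(lookahead_system, x1, x2, n0=3))     # violates causality
```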

Invertibility

- Definition for invertible system: there is only one input signal for every output signal

- Alternative definition: different input signals lead to different output signals

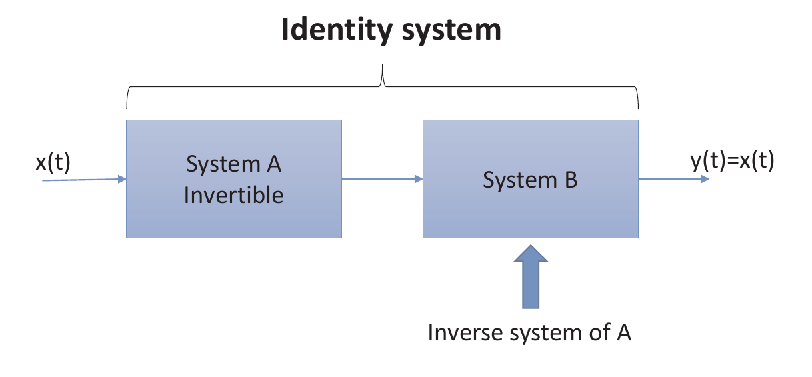

Inverse system and identity system

- For an invertible system, its inverse system is defined as the system mapping each output of the original system to its corresponding input.

- For example, if system A is a running sum system, then its inverse system B is a first difference system.

- Cascading a system and its inverse system forms an identity system

Stability

- Definition of Stability: A system is considered stable if it satisfies the BIBO (Bounded Input Bounded Output) condition. This means that for any bounded input, the output remains bounded.

- Boundedness:

- An input is bounded if there exists a positive number $$m$$ such that: $$ |x(t)| < m $$ for all $$t$$.

- Similarly, the output must also remain bounded for the system to be stable.

- BIBO Stability:

- A system is BIBO stable if and only if for any bounded input, the output is also bounded.

- To prove BIBO stability, we need to show that for an arbitrary bounded input, the output is also bounded.

- Mathematically, if $$ |x(t)| < m $$ for all $$t$$, then there exists a positive number $$M$$ such that: $$ |y(t)| < M $$ for all $$t$$.

- Unstable Systems:

- A system is unstable if there exists at least one bounded input that produces an unbounded output.

- To prove instability, we need only one bounded input that generates an unbounded output. (One counter-example is enough.)
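A standard counter-example of this kind is the running sum driven by the unit step: the input never exceeds 1 in magnitude, yet the output grows like $$n + 1$$. The sketch below is an illustration with assumed helper names; it shows growth on a finite range rather than proving unboundedness.

```python
def running_sum_system(x, n_min=0):
    """y[n] = sum of x[k] for n_min <= k <= n (assumes x[k] = 0 for k < n_min)."""
    return lambda n: sum(x(k) for k in range(n_min, n + 1))

unit_step = lambda n: 1 if n >= 0 else 0     # bounded input: |u[n]| <= 1

y = running_sum_system(unit_step)

input_bound = max(abs(unit_step(n)) for n in range(-10, 1000))
print(input_bound)                   # the input stays bounded
print([y(n) for n in (9, 99, 999)])  # the output equals n + 1 and keeps growing
```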

System Properties

Linearity

Definition of Linearity in a System:

A system is linear if, for any two inputs $$x_1(t)$$ and $$x_2(t)$$ producing outputs $$y_1(t)$$ and $$y_2(t)$$, the following two conditions are satisfied:

- Additivity Property: $$x_1(t) + x_2(t) \to y_1(t) + y_2(t)$$

- Homogeneity Property: $$a\,x_1(t) \to a\,y_1(t)$$ for any complex number $$a$$

Partial Knowledge Gained from Linearity:

- If we know the responses of two individual signals, we can determine the response of their sum.

- If we know the response of a single signal, we can determine the response of that signal after any rescaling.

Proof or disproof

- To prove a system is linear, we need to show for any input signal(s), both the additivity and homogeneity hold.

- On the other hand, to prove a system is nonlinear, we need to show there exist some input signal(s), for which either the additivity or homogeneity does not hold.

Simplified definition for linearity (more commonly used)

A system is linear iff

$$a_1 x_1(t) + a_2 x_2(t) \to a_1 y_1(t) + a_2 y_2(t)$$

for any inputs $$x_1(t)$$, $$x_2(t)$$, and any complex numbers $$a_1$$ and $$a_2$$.

Generalization of Linearity

- Concept:

- Linearity can be extended to any number of input signals.

- If a system is linear, then for any positive integer $$K$$, the following holds:

$$\sum_{k=1}^{K} a_k x_k(t) \to \sum_{k=1}^{K} a_k y_k(t)$$

Here, $$x_k(t)$$ are the input signals, $$y_k(t)$$ are the corresponding outputs, and $$a_k$$ are complex coefficients.

- Key Insight: The response of a linear system to a linear combination of input signals is the same linear combination of their individual outputs.

Quick Ruling-Out of Linearity

- Zero-In Zero-Out (ZIZO) Property

- If a system is linear, it must satisfy: If $$x(t) = 0$$ for all $$t$$, then $$y(t) = 0$$ for all $$t$$.

- Proof: Uses homogeneity

- $$0 = 0 \cdot x(t) \to 0 \cdot y(t) = 0$$, where $$x(t)$$ is an arbitrary signal and $$y(t)$$ is its response.

- Necessary but Not Sufficient: ZIZO is a necessary condition for linearity, but not sufficient (e.g., a squarer satisfies ZIZO but is not linear).
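The superposition test and the ZIZO shortcut can both be tried numerically. This is a sketch under assumptions: the three systems, the test inputs and the coefficients are illustrative choices, and a numerical pass on a few inputs is evidence rather than a proof of linearity, while a single failure is a valid disproof.

```python
def squarer(x):
    return lambda t: x(t) ** 2          # satisfies ZIZO but is nonlinear

def gain3(x):
    return lambda t: 3.0 * x(t)         # linear

def offset(x):
    return lambda t: x(t) + 1.0         # fails ZIZO, hence nonlinear

def superposition_holds(system, x1, x2, a1, a2, ts, tol=1e-9):
    """Compare System{a1 x1 + a2 x2} with a1 System{x1} + a2 System{x2}."""
    w = lambda t: a1 * x1(t) + a2 * x2(t)
    z = system(w)
    y1, y2 = system(x1), system(x2)
    return all(abs(z(t) - (a1 * y1(t) + a2 * y2(t))) <= tol for t in ts)

x1 = lambda t: t
x2 = lambda t: 1.0 + 0.5 * t
zero = lambda t: 0.0
ts = [k / 4 for k in range(-8, 9)]

print(superposition_holds(gain3, x1, x2, 2.0, -1.0 + 2.0j, ts))
print(superposition_holds(squarer, x1, x2, 2.0, -1.0, ts))
print(squarer(zero)(0.7), offset(zero)(0.7))   # ZIZO check on the zero input
```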

Time-invariance

- Definition: For any time shift $$T_0$$ (for CT systems) or $$N_0$$ (for DT systems) and any input signal $$x$$:

- Continuous-Time (CT) System: if $$x(t) \to y(t)$$, then $$x(t - T_0) \to y(t - T_0)$$

- Discrete-Time (DT) System: if $$x[n] \to y[n]$$, then $$x[n - N_0] \to y[n - N_0]$$

- Key Insight: A time shift in the input signal causes no change in the output except for an identical time shift.

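Problem-solving methods

Proof strategy for linearity and time-invariance

- Proof of linearity

- Evaluate the output of $$a_1 x_1(t) + a_2 x_2(t)$$

- Evaluate $$a_1 y_1(t) + a_2 y_2(t)$$

- If equal $$\Rightarrow$$ linear system

- Proof of time-invariance

- Evaluate the output of $$x(t - T_0)$$

- Evaluate $$y(t - T_0)$$

- If equal $$\Rightarrow$$ time-invariant system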

Template

Proving Linearity

1. Define Inputs and Scalars: Let $$ x_1(t) $$, $$ x_2(t) $$ be input signals, and $$ a_1 $$, $$ a_2 $$ be complex numbers.

2. Apply Superposition to Input: Let $$ w(t) = a_1x_1(t) + a_2x_2(t) $$.

3. Evaluate System Response to Superposition: Compute $$ z(t) = \text{System}\{w(t)\} $$.

4. Evaluate Superposition of Output: On the other hand, compute $$ a_1y_1(t) + a_2y_2(t) $$, where $$ y_i(t) = \text{System}\{x_i(t)\} $$.

5. Compare the results of steps 3 and 4: Verify whether $$ z(t) = a_1y_1(t) + a_2y_2(t) $$.

6. Conclusion: If equal, the system is linear; otherwise, it is nonlinear.

Proving Time-Invariance

1. Define Input and Time Shift: Let $$ x(t) $$ be an input signal and $$ T_0 $$ be a time shift.

2. Apply Time Shift to Input: Let $$ w(t) = x(t - T_0) $$.

3. Evaluate System Response to Shifted Input: Compute $$ z(t) = \text{System}\{w(t)\} $$.

4. Evaluate Shifted Output: On the other hand, compute $$ y(t - T_0) $$, where $$ y(t) = \text{System}\{x(t)\} $$.

5. Compare with Shifted Output: Verify whether $$ z(t) = y(t - T_0) $$.

6. Conclusion: If equal, the system is time-invariant; otherwise, it is time-variant.
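The time-invariance template translates directly into a numerical check. The sketch below works in discrete time with an integer shift `n0` (an adaptation of the CT template, with assumed example systems and input); as with linearity, a pass on one input is only evidence, while one failure is a disproof.

```python
def delay_one(x):
    """y[n] = x[n - 1]: time-invariant."""
    return lambda n: x(n - 1)

def ramp_gain(x):
    """y[n] = n * x[n]: time-variant, the gain depends on absolute time."""
    return lambda n: n * x(n)

def shift_commutes(system, x, n0, ns):
    """Follow the template: compare System{x[n - n0]} with y[n - n0]."""
    w = lambda n: x(n - n0)      # shifted input
    z = system(w)                # response to the shifted input
    y = system(x)                # response to the original input
    return all(z(n) == y(n - n0) for n in ns)

x = lambda n: 1 if 0 <= n < 4 else 0     # assumed test input: short pulse
ns = range(-10, 11)

print(shift_commutes(delay_one, x, n0=2, ns=ns))
print(shift_commutes(ramp_gain, x, n0=2, ns=ns))
```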

Appendix: Supplementary Mathematics

Triangle Inequality

$$|a + b| \le |a| + |b|$$

At the same time,

$$|a - b| \ge \big|\,|a| - |b|\,\big|$$